Sonair's first-ever whitepaper, “Beyond sight,” dives deep into why multisensory perception is the future of autonomy and exactly how Sonair’s 3D ultrasonic sensing tech is bringing a new dimension of safety and reliability to autonomous machines. Download it here.

Cameras and LiDAR have dominated robotic perception for years. But they’re not enough.

If you’ve ever watched an autonomous robot stop dead in its tracks because of a shadow, a reflective surface, or poor lighting, you’ve seen the problem firsthand. In warehouses, factories, and outdoor environments, machines need to perceive the world beyond what the eye (or a laser) can see.

These flaws are not trivial; they pose serious safety risks to humans, objects, and other moving machines.

This is why we built ADAR (acoustic detection and ranging).

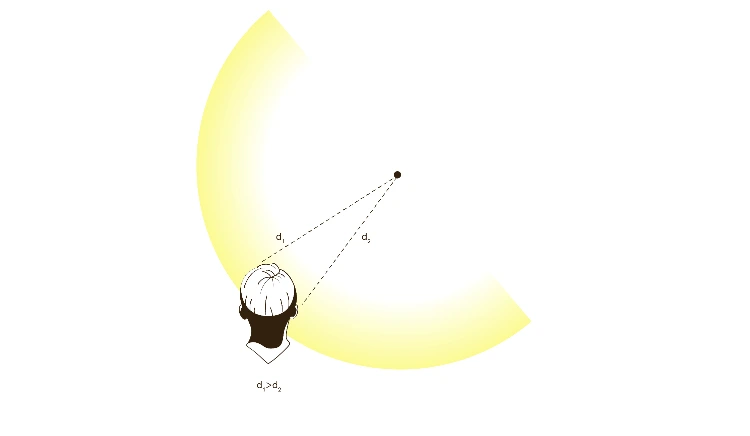

The technology behind echolocation has been perfected by nature for millions of years, yet it’s missing from the world of robotics. Until now.

The whitepaper is authored by Alfonso Rodríguez-Molares, PhD in Signal Processing. He has published more than 25 peer-reviewed articles, amassing 1,545 citations to date, and created the Ultrasound Toolbox (USTB), an open-source platform widely embraced by the ultrasound research community.


What is the angular resolution of your sensor?

Angular resolution is not easily defined for ADAR, because the sensor does not send a pulse out at a discrete angle. If you are familiar with LiDAR, angular resolution there is the angular distance (in degrees) between the distance measurements of two adjacent beams.
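For comparison, the LiDAR definition reduces to simple arithmetic. This is a minimal sketch, assuming a hypothetical spinning LiDAR whose measurements are evenly spaced over a full 360° revolution; the function name and the 1800-measurement figure are illustrative, not taken from any datasheet:

```python
def spinning_lidar_angular_resolution(points_per_revolution: int) -> float:
    """Angular spacing in degrees between adjacent beam measurements.

    Assumes (hypothetically) that measurements are evenly spaced over a
    full 360° revolution of the scanner.
    """
    if points_per_revolution <= 0:
        raise ValueError("points_per_revolution must be positive")
    return 360.0 / points_per_revolution


# Hypothetical scanner taking 1800 measurements per revolution.
print(spinning_lidar_angular_resolution(1800))  # 0.2
```

Because ADAR does not sample the scene beam by beam, there is no equivalent spacing to compute for it.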

This definition does not apply to ADAR, because ADAR does not emit beams. Its angular precision is 2° straight ahead and 10° to the sides.

The sensor can distinguish between multiple detected objects if they are separated by more than 2 cm in range relative to the sensor, or by more than 15° in angle from each other. If two objects are both closer than 2 cm in range and closer than 15° in angle, they will be detected as one object.
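The separation rule can be written as a small predicate. This is a sketch under stated assumptions: the `Detection` type, its fields and both helper names are hypothetical, bearings are assumed to stay within the sensor's field of view (no 360° wrap-around), and "more than" is read as a strict inequality. The `lateral_gap_m` helper is plain geometry (chord length 2r·sin(Δθ/2)), included only to show what 15° means as a distance at a given range.

```python
import math
from dataclasses import dataclass

# Thresholds quoted in the FAQ answer.
RANGE_SEPARATION_M = 0.02    # 2 cm in range
ANGLE_SEPARATION_DEG = 15.0  # 15° in bearing


@dataclass(frozen=True)
class Detection:
    """Hypothetical detection: range from the sensor (m) and bearing (degrees)."""
    range_m: float
    bearing_deg: float


def are_resolved(a: Detection, b: Detection) -> bool:
    """True if two objects would be reported as separate detections.

    Objects are distinguishable if they differ by more than 2 cm in range
    OR by more than 15° in bearing; otherwise they merge into one object.
    Bearings are assumed to stay within the field of view (no wrap-around).
    """
    range_gap = abs(a.range_m - b.range_m)
    angle_gap = abs(a.bearing_deg - b.bearing_deg)
    return range_gap > RANGE_SEPARATION_M or angle_gap > ANGLE_SEPARATION_DEG


def lateral_gap_m(range_m: float, angle_gap_deg: float) -> float:
    """Straight-line distance between two points at the same range that are
    angle_gap_deg apart (chord length 2 * r * sin(theta / 2))."""
    return 2.0 * range_m * math.sin(math.radians(angle_gap_deg) / 2.0)


# Same range, 10° apart: merged into one object.
print(are_resolved(Detection(2.00, -5.0), Detection(2.00, 5.0)))  # False
# 5 cm apart in range, same bearing: reported separately.
print(are_resolved(Detection(2.00, 0.0), Detection(2.05, 0.0)))   # True
# What 15° means laterally at 2 m from the sensor.
print(f"{lateral_gap_m(2.0, ANGLE_SEPARATION_DEG):.2f} m")         # 0.52 m
```

For example, at 2 m from the sensor two objects 15° apart are roughly 0.52 m apart laterally, so objects at the same range standing closer together than that would be reported as one.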

Precision is a measure of the statistical deviation of repeated measurements of a single object’s position.
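As an illustration of that definition, precision can be estimated as the standard deviation of repeated readings of one static object. This is a minimal sketch, assuming the sample standard deviation is the statistic used (the whitepaper may define it differently, for example as a multiple of sigma); the bearing readings are made-up values, not measured data:

```python
import statistics


def precision(measurements: list[float]) -> float:
    """Spread of repeated measurements of one object's position.

    Uses the sample standard deviation; a smaller value means the sensor
    reports the same object more consistently.
    """
    if len(measurements) < 2:
        raise ValueError("need at least two repeated measurements")
    return statistics.stdev(measurements)


# Made-up repeated bearing readings (degrees) of one static object.
bearings_deg = [0.4, -0.3, 0.1, 0.2, -0.4]
print(f"angular precision ≈ {precision(bearings_deg):.2f}°")  # ≈ 0.34°
```

Note that this captures repeatability only: a constant offset from the object's true position would not show up in the standard deviation.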

What is the maximum number of points in the point cloud?

The maximum number of points very rarely limits the sensor’s performance, because few points are needed to fully sense a scene: ADAR reports one point per surface on any object. This is the opposite of what you may be used to from LiDAR, where dense point clouds are the norm.

The relative sparsity of the point cloud is a fundamental feature of sound-based sensing, not a sensor limitation: the point cloud always contains at least one point per object within the sensor’s line of sight.
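To make the difference in point counts concrete, here is a sketch comparing a scanning LiDAR, which returns up to one point per beam measurement, with a sensor that reports one point per visible surface. The `SurfacePoint` type, the 16-channel scanner with 1800 measurements per channel, and the three-object scene are all hypothetical, chosen only for the arithmetic:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SurfacePoint:
    """Hypothetical reported point: position (m) of one reflecting surface."""
    x_m: float
    y_m: float
    z_m: float


def lidar_max_points(channels: int, points_per_channel: int) -> int:
    """Upper bound for one LiDAR frame: one point per beam measurement."""
    return channels * points_per_channel


def per_surface_points(surfaces: list[SurfacePoint]) -> int:
    """Point count when each visible surface contributes a single point."""
    return len(surfaces)


# Hypothetical scene: a wall, a pallet and a person in front of the sensor.
scene = [
    SurfacePoint(3.0, 0.0, 0.5),   # wall
    SurfacePoint(1.5, -0.8, 0.3),  # pallet
    SurfacePoint(2.0, 0.6, 1.0),   # person
]
print(lidar_max_points(16, 1800))  # 28800
print(per_surface_points(scene))   # 3
```

The per-surface count grows with the number of things in view rather than with angular sampling density, which is why a cap on the number of points rarely matters.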

Can the sensor distinguish between humans and objects among detected obstacles?

ADAR does not perform object classification. The sensor detects both people and objects, but does not tell them apart.