When selecting sensors for autonomous robots, you're not just making a hardware choice – you're deciding how your machines perceive and interact with the world around them. Each sensor technology carries its own strengths and limitations, offering varying levels of safety, affordability, and efficiency.

This guide provides a concise comparison of key sensor technologies for people and obstacle detection – Sonair’s ADAR (acoustic detection and ranging) ultrasonic sensors, 2D and 3D LiDAR (light detection and ranging), 2D and 3D cameras, and RADAR (radio detection and ranging) – helping you navigate their traits and applications and determine which option or combination is right for your use case. Whether you're optimizing for safety, cost, or versatility, this comparison will empower you to make informed decisions.

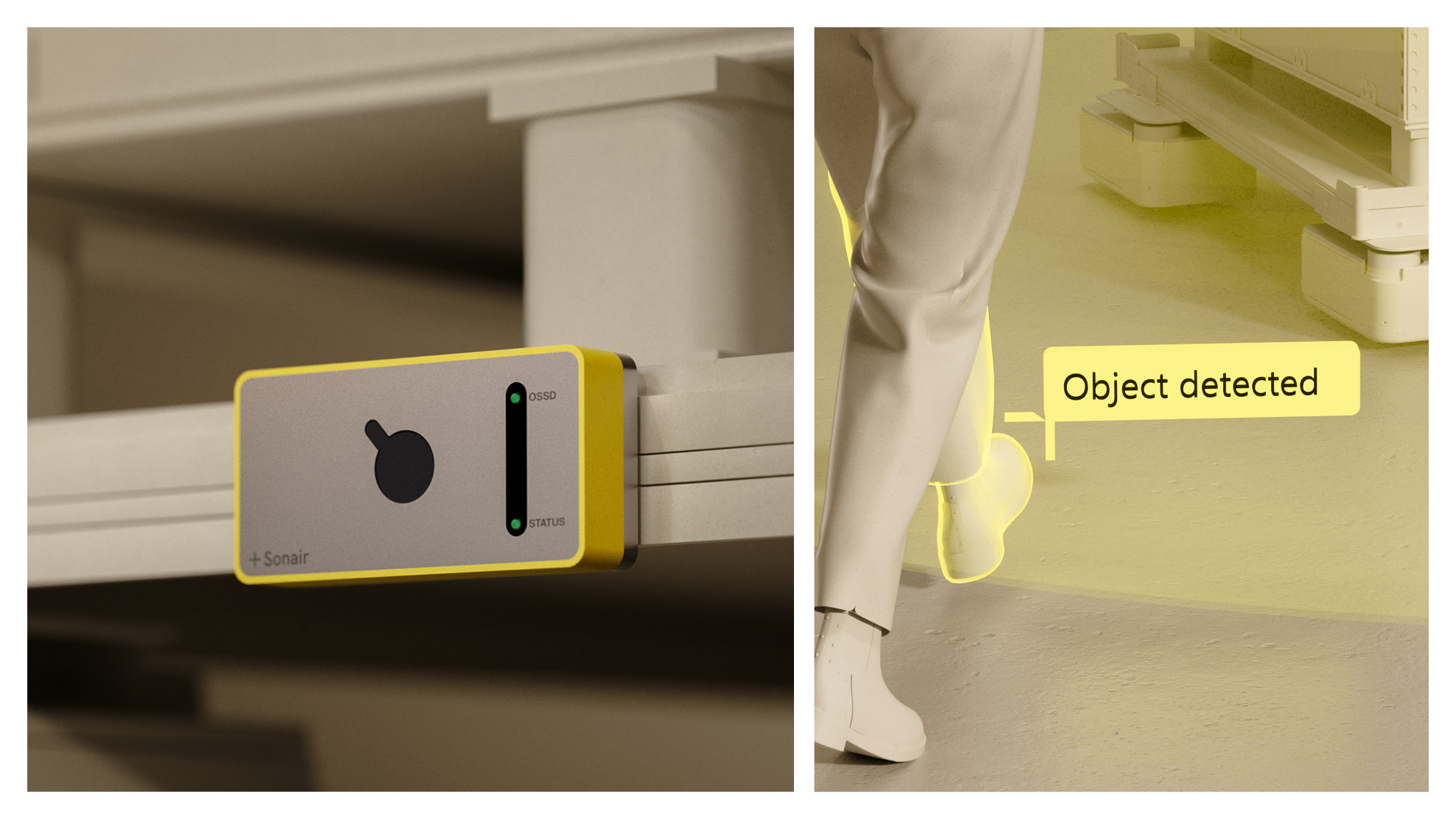

Sonair’s ADAR ultrasonic sensors are designed to make robots safer, more affordable, and more efficient. ADAR thrives in dynamic environments, delivering safe 3D human and obstacle detection without being blinded by light or compromising privacy. Compact yet powerful, ADAR promises cost-effective solutions with the potential to redefine sensing technology, not only for autonomous mobile robots (AMRs) but also for static robot and automation cells, where it can act as a virtual safeguard.

When to use: ADAR is ideal for applications requiring high safety standards and cost efficiency – particularly in dynamic environments or static automation cells requiring full 3D field coverage. As an additional advantage, the privacy protection that ADAR provides makes it particularly suitable for sensitive environments where cameras may pose concerns.

2D LiDAR is one of the most mature solutions in robotics sensing. It provides real-time obstacle detection on a single plane, making it well suited to flat surfaces like warehouse floors. While affordable 2D LiDAR units exist, affordable safety-rated options are hard to find: safety LiDARs from well-known brands cost upwards of USD 4,000. 2D LiDAR is a good fit where simplicity matters more than rich perception. Think of highly predictable industrial environments with no need for 3D depth perception or human-robot interaction. 2D LiDARs are not suited to environments with glass walls and reflective surfaces (for example, many modern hospitals, airports, and offices).

When to use: Best suited for static environments with simple layouts where 2D sensing is sufficient and keeping costs low is not a primary concern.

3D LiDAR creates detailed point clouds (essentially, 3D maps of dots that precisely capture the shape and layout of the robot’s surroundings), allowing for accurate navigation and object recognition. With high accuracy and depth perception, it excels in environments requiring precise navigation. However, no safety-rated 3D LiDARs are currently available. This limits its applicability in certain use cases and rules it out as a standalone solution in safety-critical environments. Units from well-known brands are also typically expensive, making 3D LiDAR a poor fit for tight budgets.

When to use: Ideal for static industrial settings requiring high-resolution mapping where keeping costs low is not a primary concern.

The case for 2D cameras rests on their ability to capture rich visual data and on their affordability, which together have driven large-scale adoption over time. However, while they can handle basic object recognition, 2D cameras lack the depth perception needed for tasks that demand spatial awareness. They also require significant computational resources for real-time applications. No safety-rated cameras are currently available, so they often need to be combined with additional sensors.

When to use: Suitable for tasks requiring visual classification but not safe detection.

3D cameras add depth perception to visual imagery, making them ideal for detailed scene understanding. While versatile, they require significant processing power at the edge for real-time applications. 3D cameras also have field of view (FoV) limitations. These force trade-offs in mounting position between visibility near the floor and visibility higher up. As the robot approaches an object, parts of it may fall outside the FoV.
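The mounting trade-off can be made concrete with basic geometry. The sketch below rests on several assumptions:

* A simple pinhole camera mounted over a flat floor.
* An optical axis tilted down by a hypothetical angle.
* A hypothetical vertical FoV.

Real 3D cameras also have a minimum depth range, which this sketch ignores. It estimates how far ahead the floor first becomes visible and how high the camera can see at a given distance.

```python
import math


def floor_blind_zone_m(mount_height_m: float, tilt_down_deg: float, vfov_deg: float) -> float:
    """Horizontal distance in front of the camera within which the floor is not visible.

    Assumes a pinhole camera over a flat floor. The lower edge of the vertical
    FoV points down at (tilt_down_deg + vfov_deg / 2) below horizontal.
    """
    lower_edge_deg = tilt_down_deg + vfov_deg / 2
    if lower_edge_deg <= 0:
        return math.inf  # lower edge at or above horizontal: floor never visible
    if lower_edge_deg >= 90:
        return 0.0  # lower edge points straight down or beyond: floor visible at the base
    return mount_height_m / math.tan(math.radians(lower_edge_deg))


def max_visible_height_m(mount_height_m: float, tilt_down_deg: float,
                         vfov_deg: float, distance_m: float) -> float:
    """Height of the upper FoV edge at a given horizontal distance from the camera."""
    upper_edge_deg = vfov_deg / 2 - tilt_down_deg  # elevation of the upper edge above horizontal
    return mount_height_m + distance_m * math.tan(math.radians(upper_edge_deg))


# Hypothetical example: camera 0.3 m above the floor with a 60° vertical FoV.
for tilt in (0.0, 15.0):
    blind = floor_blind_zone_m(0.3, tilt, 60.0)
    top = max_visible_height_m(0.3, tilt, 60.0, 0.5)
    print(f"tilt {tilt:4.1f}°: floor visible from {blind:.2f} m, "
          f"top of view at 0.5 m is {top:.2f} m high")
```

Consider these assumed numbers. Tilting the camera down by 15° moves the start of the visible floor from about 0.52 m to about 0.30 m. However, it lowers the top of the view at 0.5 m from about 0.59 m to about 0.43 m. As a result, more of a nearby person's body drops out of frame.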

When to use: Best suited for retail robotics or service robots requiring object recognition alongside spatial awareness. However, computational demands and mounting considerations should be accounted for in dynamic environments.

3D radar uses radio waves to detect objects’ distance and velocity, making it reliable in adverse weather conditions such as rain or fog. However, radar has limitations. Its ability to separate closely spaced objects is limited, which can make it harder to identify individual obstacles. In environments with reflective materials, radar may produce ghost targets or misinterpret objects. It can also detect through walls, which might cause false negatives in certain applications. Finally, radar operates in regulated frequency bands and may require location-specific licenses or configuration adjustments to comply with local regulations.

When to use: Best suited for outdoor environments requiring long-range obstacle detection, though users should be aware of potential issues with object separation and regional regulatory requirements.

The table below provides a quick-reference comparison of key sensor features across six technologies. Use this matrix to evaluate which options align best with your specific requirements, whether it’s precision, safety, cost-effectiveness, or environmental resilience.

*Note: There is only one safety-rated radar sensing solution currently available, which does not have a small external form factor.

There’s rarely a single sensor that meets every need in a complex robotics environment. Combining the strengths of multiple sensors can create more holistic solutions that overcome individual limitations, particularly in dynamic settings involving AMRs. However, given its 3D safety capabilities, cost-effectiveness, and resilience to environmental factors like lighting, ADAR is often an ideal foundation upon which to build more comprehensive robotics sensing solutions.

For example:

By combining technologies like ADAR with cameras or LiDAR, businesses can build sensor packages tailored to their specific needs to create robust solutions that balance cost, precision, and versatility.

Robotics sensing technology has evolved significantly, offering diverse solutions tailored to different needs. It’s clear that our ADAR ultrasonic sensors stand out as a versatile option that combines safety, affordability, and efficiency, making them a potential game-changer for dynamic environments. Ready to explore how ADAR can transform your robotics vision? Contact us today to learn more about our evaluation kit and see how Sonair’s groundbreaking technology can elevate your operations.

What is the angular resolution of your sensor?

This concept is not easily defined for ADAR because a pulse is not sent out at a discrete angle. For readers familiar with LiDARs: there, angular resolution is the angular distance (in degrees) between the distance measurements of two adjacent beams.
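For LiDAR, angular resolution matters because the gap between neighboring beams grows with range. Take two adjacent beams separated by Δθ. At range r, the distance between their hit points is the chord 2r·sin(Δθ/2), which is close to r·Δθ (in radians) for small angles. The sketch below assumes a hypothetical 0.25° resolution, which is not a figure from this document.

```python
import math


def beam_spacing_m(range_m: float, angular_resolution_deg: float) -> float:
    """Distance between the hit points of two adjacent LiDAR beams at a given range.

    Chord length 2 * r * sin(dtheta / 2), approximately r * dtheta for small angles.
    """
    half_angle_rad = math.radians(angular_resolution_deg) / 2
    return 2 * range_m * math.sin(half_angle_rad)


# Hypothetical 0.25° angular resolution at a few ranges.
for r in (1.0, 5.0, 10.0):
    print(f"{r:4.1f} m: {beam_spacing_m(r, 0.25) * 100:.1f} cm between neighboring beams")
```

At 10 m the spacing under this assumption is roughly 4.4 cm. A thin object narrower than that can fall between two beams at that range.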

This does not apply to ADAR because it does not emit beams. The angular precision is 2° straight ahead and 10° to the sides. The sensor can distinguish between multiple detected objects if they are separated by more than 2 cm in range relative to the sensor or by more than 15° from each other. If two objects are both closer than 2 cm in range and within 15° of each other, they will be detected as 1 object.
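The separation rule above can be read as a simple predicate. The sketch below is a hypothetical illustration, not Sonair's software or API. It makes the following assumptions:

* Each detection is given as a range plus an azimuth and elevation relative to the sensor.
* Angular separation is measured as the angle between the two direction vectors.
* The text does not say how a gap of exactly 2 cm or 15° is treated, so the sketch uses a strict "more than".

```python
import math
from dataclasses import dataclass

# Thresholds taken from the answer above; everything else is a hypothetical illustration.
RANGE_SEPARATION_M = 0.02
ANGLE_SEPARATION_DEG = 15.0


@dataclass
class Detection:
    range_m: float
    azimuth_deg: float
    elevation_deg: float = 0.0


def _unit_vector(d: Detection) -> tuple[float, float, float]:
    """Direction from the sensor to the detection as a 3D unit vector."""
    az, el = math.radians(d.azimuth_deg), math.radians(d.elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))


def angle_between_deg(a: Detection, b: Detection) -> float:
    """Angle between the directions to two detections, as seen from the sensor."""
    dot = sum(x * y for x, y in zip(_unit_vector(a), _unit_vector(b)))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))  # clamp rounding error


def reported_separately(a: Detection, b: Detection) -> bool:
    """True if the objects differ by more than 2 cm in range OR more than 15° in angle."""
    range_gap = abs(a.range_m - b.range_m)
    return range_gap > RANGE_SEPARATION_M or angle_between_deg(a, b) > ANGLE_SEPARATION_DEG


print(reported_separately(Detection(1.50, 0.0), Detection(1.51, 10.0)))  # close in both: merged
print(reported_separately(Detection(1.50, 0.0), Detection(1.55, 5.0)))   # 5 cm apart in range
print(reported_separately(Detection(1.50, 0.0), Detection(1.50, 20.0)))  # 20° apart
```

Only when both the range gap and the angular gap fall below their thresholds are the two objects merged into one detection.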

Here, precision is a measure of the statistical spread of repeated measurements of a single object’s position.
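Precision in this sense is commonly summarized as the standard deviation of repeated readings of a stationary object. A constant offset from the true position is an accuracy (bias) issue rather than a precision issue. The sketch below uses made-up readings. The FAQ does not say which statistic (for example, one or two standard deviations) the 2° and 10° figures correspond to.

```python
import statistics
from typing import Optional


def precision_report(readings: list[float], true_value: Optional[float] = None) -> dict[str, float]:
    """Summarize repeated measurements of one stationary object's position."""
    report = {
        "mean": statistics.mean(readings),
        "std_dev": statistics.stdev(readings),  # spread of the readings: precision
    }
    if true_value is not None:
        report["bias"] = report["mean"] - true_value  # systematic offset: accuracy, not precision
    return report


# Made-up bearing readings (degrees) of an object placed straight ahead of a sensor.
readings_deg = [0.4, -0.6, 1.1, 0.2, -0.9, 0.5, -0.3, 0.1]
print(precision_report(readings_deg, true_value=0.0))
```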

What is the maximum number of points in the point cloud?

The maximum number of points is very rarely a limitation on the sensor’s performance, because the total number of points needed to fully sense a scene is low. ADAR reports 1 point per surface on any object, which keeps the total number of points low. This is the opposite of what one might be used to from LiDARs.

The relative sparsity of the point cloud is a fundamental feature of sound-based sensing, but this is not a sensor limitation as the point cloud will always contain at least 1 point per object within line of sight from the sensor.
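A rough calculation shows the contrast with LiDAR. The sketch assumes hypothetical LiDAR specifications: a 270° 2D scanner at 0.25° and a 16-layer 360° scanner at 0.2°. For LiDAR it counts one measurement per beam direction per layer. For ADAR, per the answer above, it counts about one point per surface in line of sight. Exact counts vary by device.

```python
def lidar_points_per_frame(fov_deg: float, angular_resolution_deg: float, layers: int = 1) -> int:
    """Approximate measurements per frame: one per beam direction per layer.

    round() guards against floating-point error in the division.
    """
    return round(fov_deg / angular_resolution_deg) * layers


def adar_points_per_frame(surfaces_in_line_of_sight: int) -> int:
    """Per the FAQ answer: roughly one point per surface on each visible object."""
    return surfaces_in_line_of_sight


print("2D LiDAR (270°, 0.25°):", lidar_points_per_frame(270, 0.25))
print("3D LiDAR (360°, 0.2°, 16 layers):", lidar_points_per_frame(360, 0.2, layers=16))
print("ADAR, scene with 4 surfaces:", adar_points_per_frame(4))
```

The LiDAR count is fixed by the scan pattern regardless of scene content. The ADAR count scales with the number of surfaces actually present.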

Can the sensor distinguish between humans and objects among detected obstacles?

ADAR does not perform object classification. The sensor detects both people and objects but does not distinguish between them.