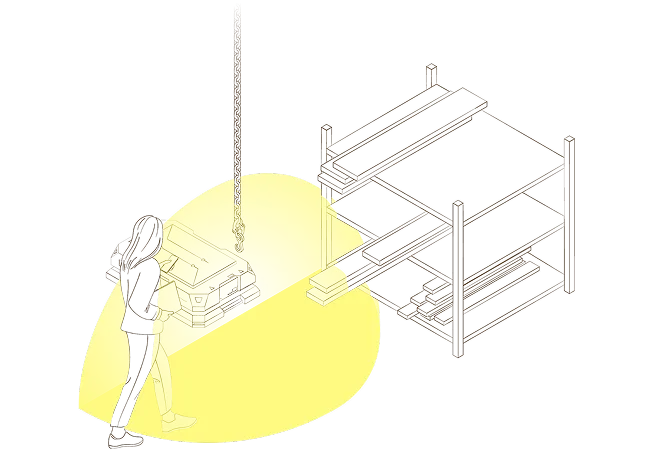

ADAR cuts your sensor costs by up to 50% — without compromising on safety or performance.

A full 180 x 180 degree field of view at a range of up to four meters.

Pre-configure 128 safety zones and constantly monitor one stop zone and two warning zones.

Go from a flat 2D view to full 3D to capture all spatial data instantly.

ADAR emits sound and captures echoes to detect objects in 3D, enabling perception in darkness, dust, or glare where cameras and LiDAR struggle.

Using a fixed ultrasonic array with no moving parts, ADAR covers a 180° x 180° field of view. This gives robots omnidirectional awareness with centimeter-level precision.

At the heart of ADAR are PMUTs (Piezoelectric Micromachined Ultrasonic Transducers)—tiny, high-performance ultrasonic emitters and receivers fabricated using MEMS technology.

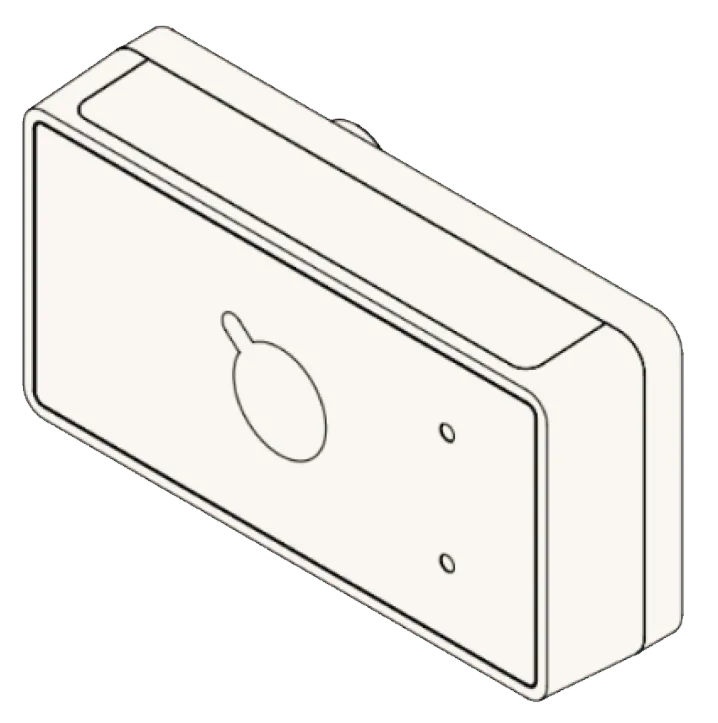

| Parameter | Value |
| --- | --- |
| Size | Height 54 mm, width 104 mm, depth 25 mm |
| Weight | 200 g |
| Detection range | 0-4 m |
| Detection close range | 0-5 cm (obstacle is detected but position not reported) |
| Range precision | ± 2 cm |
| Angular precision | ± 2° (center), ± 10° (edge) |
| Detection capability | Ø30 mm cylinder up to 3 m |
| Allow multiple ADAR devices inside the detection range without crosstalk | Yes |
| Interfaces: safe output | 1 OSSD pair (protective zone) |
| Interfaces: safe inputs | 11 static control inputs (zone select) |
| Interfaces: data | 1 Ethernet: 3D point cloud (CoAP over UDP/IP), configuration (TCP/IP) |
| Number of simultaneously monitored zones | 3 (1x protective zone, 2x warning zones per preset) |
| Number of zone presets | 128 |
| Ultrasonic frequency | 70-85 kHz |
| Response time | 112 ms |
| Power consumption | Max 5 W |
| Supply voltage | 24 V DC (18 V to 28 V DC) (SELV/PELV) |
| Ingress protection (IP) | IP64 |
| Operating temperature | -10 to +50 °C |
| Field of view (FoV) | 180 x 180 degrees |
| Frame rate | 18 Hz |

Safety parameters

The product is designed with the following intended safety functionality. All details are pending.

Safety integrity level: SIL2 (IEC 61508)

Performance level: PL d, Cat. 2 (EN ISO 13849)

Revolutionary MEMS transducers

Our tiny, custom-made PMUTs enable the magic of ADAR

Quad-core ARM SoC

The powerhouse behind ADAR's real-time 3D awareness

Rust

A trailblazing programming language for embedded development

ROS2

The open source robotic middleware that delivers ADAR's 3D point cloud output

OSSD

The output signal switching device is what instructs the robot to stop when safety events occur

Ultrasound is sound at frequencies that are inaudible to human ears. Well-known applications are underwater (SONAR) and non-invasive medical imaging. Ultrasound is also in use today for reliable 1D distance measurement when we park our cars.

Sonair is developing a 3D distance sensor that provides autonomous robots with omnidirectional depth sensing. We call this new technology ADAR (acoustic detection and ranging).
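The ranging principle behind any echo-based sensor can be illustrated with simple time-of-flight arithmetic. The sketch below is not Sonair's implementation. It assumes a speed of sound of 343 m/s (dry air at roughly 20 °C), and the function names are made up for illustration.

```python
# Assumed value: speed of sound in dry air at about 20 °C.
SPEED_OF_SOUND_M_S = 343.0


def echo_distance_m(echo_delay_s: float, speed: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to a reflector, given the round-trip delay of its echo."""
    if echo_delay_s < 0:
        raise ValueError("echo delay must be non-negative")
    # The sound travels to the object and back, so halve the path length.
    return speed * echo_delay_s / 2.0


def round_trip_time_s(distance_m: float, speed: float = SPEED_OF_SOUND_M_S) -> float:
    """Round-trip travel time of sound to a reflector at the given distance."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / speed


if __name__ == "__main__":
    # Echo delay for an object at the edge of a 4 m detection range.
    print(f"{round_trip_time_s(4.0) * 1e3:.1f} ms")
```

Under that assumption, an echo from an object 4 m away returns after roughly 23 ms.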

What determines the position of the points on an object?

Points appear where the sound waves bounce back to the sensor. This happens from any surface that has a normal pointing to the sensor. Sound is also reflected back by edges and corners. For each surface or edge, the detection is positioned at the point on that surface or edge which is closest to the sensor.
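The "closest point" rule above can be sketched geometrically. The example below is an illustration only and not the sensor's algorithm. It treats a surface as an infinite plane and an edge as a straight line segment, and the helper names are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _add_scaled(a: Vec3, d: Vec3, t: float) -> Vec3:
    return (a[0] + t * d[0], a[1] + t * d[1], a[2] + t * d[2])


def closest_point_on_plane(sensor: Vec3, point_on_plane: Vec3, normal: Vec3) -> Vec3:
    """Foot of the perpendicular from the sensor onto an (infinite) plane."""
    nn = _dot(normal, normal)
    if nn == 0.0:
        raise ValueError("normal must be non-zero")
    # Signed offset of the sensor from the plane, in units of the normal.
    d = _dot(_sub(sensor, point_on_plane), normal) / nn
    return _add_scaled(sensor, normal, -d)


def closest_point_on_edge(sensor: Vec3, a: Vec3, b: Vec3) -> Vec3:
    """Closest point to the sensor on the segment from a to b."""
    ab = _sub(b, a)
    denom = _dot(ab, ab)
    if denom == 0.0:
        return a  # degenerate edge: both endpoints coincide
    t = _dot(_sub(sensor, a), ab) / denom
    t = max(0.0, min(1.0, t))  # clamp so the point stays on the segment
    return _add_scaled(a, ab, t)
```

For a sensor at the origin facing a wall at x = 2, `closest_point_on_plane` returns the point straight ahead on the wall, which is where the surface normal points back at the sensor.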

Does the sensor have ignore zones?

Yes, the sensor supports configurable ignore zones. These can be set by the user to prevent detection of known static objects, such as parts of the robot’s frame, ensuring they don’t trigger false positives.
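To make the idea of an ignore zone concrete, here is a minimal host-side sketch that drops points falling inside axis-aligned boxes. It only illustrates the concept. The `BoxZone` class and `drop_ignored` function are hypothetical, and the sensor's actual zone shapes and configuration format are not described here.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


@dataclass(frozen=True)
class BoxZone:
    """Hypothetical axis-aligned ignore zone, in sensor coordinates (meters)."""
    min_corner: Point
    max_corner: Point

    def contains(self, p: Point) -> bool:
        # A point is inside when every coordinate lies within the box bounds.
        return all(lo <= v <= hi
                   for lo, v, hi in zip(self.min_corner, p, self.max_corner))


def drop_ignored(points: Iterable[Point], zones: Iterable[BoxZone]) -> List[Point]:
    """Return only the points that lie outside every ignore zone."""
    zone_list = list(zones)
    return [p for p in points if not any(z.contains(p) for z in zone_list)]


if __name__ == "__main__":
    # Assumed example: part of the robot frame sits just in front of the sensor.
    frame = BoxZone((0.0, -0.2, -0.1), (0.3, 0.2, 0.1))
    cloud = [(0.1, 0.0, 0.0), (1.5, 0.4, 0.0)]
    print(drop_ignored(cloud, [frame]))
```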

What are the available ROS2 interfaces?

The sensor publishes a point cloud and a status message over CoAP. We provide a ROS2 driver that receives these messages and publishes a point cloud topic using the standard ROS2 message type sensor_msgs/msg/PointCloud2. For customers not running ROS2 internally, we also provide a Docker image that enables the use of Foxglove for visualization of the point cloud.

ADAR does not do object classification. The sensor is for people and object detection.
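For readers consuming the PointCloud2 topic, the sketch below shows one way to pull x, y, z values out of the message's raw byte buffer. It is an illustration, not part of Sonair's driver. `iter_xyz` is a hypothetical helper, and the default offsets assume x, y and z are stored as 32-bit floats at byte offsets 0, 4 and 8 of each point. The actual layout should be read from the message's `fields` list.

```python
import struct
from typing import Iterator, Sequence, Tuple


def iter_xyz(
    data: bytes,
    point_step: int,
    offsets: Sequence[int] = (0, 4, 8),  # assumed layout, check msg.fields
    is_bigendian: bool = False,
) -> Iterator[Tuple[float, float, float]]:
    """Yield (x, y, z) tuples from a PointCloud2-style byte buffer."""
    if point_step <= 0:
        raise ValueError("point_step must be positive")
    fmt = (">" if is_bigendian else "<") + "f"  # one 32-bit float
    # Each point occupies point_step bytes; trailing partial points are skipped.
    for base in range(0, len(data) - point_step + 1, point_step):
        x, y, z = (struct.unpack_from(fmt, data, base + off)[0] for off in offsets)
        yield (x, y, z)


if __name__ == "__main__":
    # Two fake points with a 4-byte extra field after x, y, z (16 bytes each).
    buf = struct.pack("<fffI", 1.0, 2.0, 0.5, 7) + struct.pack("<fffI", -1.0, 0.0, 3.0, 9)
    for point in iter_xyz(buf, point_step=16):
        print(point)
```

In a ROS2 subscriber callback, the same function would be called with the message's `data`, `point_step` and `is_bigendian` fields.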